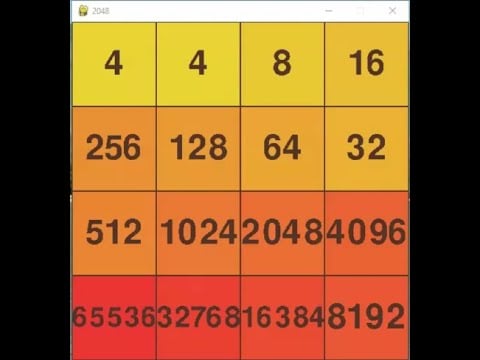

I’m working on building an AI bot for the 2048 puzzle game using the expectiminimax approach. The thing is, in 2048 there’s no actual opponent trying to minimize my score - it’s just me maximizing and random tile spawns.

Since there’s no true minimizing player in this game, I’m confused about whether alpha-beta pruning can even be used here. The game tree structure seems different from traditional two-player games where you have clear max and min nodes.

If alpha-beta pruning isn’t suitable for this situation, what other techniques can I use to cut down on unnecessary branch exploration and make the search more efficient?

Would really appreciate any advice or alternative optimization strategies for this type of single-player expectiminimax scenario.

You’re right that traditional alpha-beta doesn’t fit here. I ran into this exact issue when I built a 2048 solver a few years back.

What ended up working really well was star1 pruning (a variant designed for expectiminimax). It’s not as aggressive as alpha-beta but still cuts branches when you can prove they won’t affect the final decision.

Another trick that gave me huge performance gains was state space reduction. Instead of exploring every possible tile spawn, I grouped similar board positions and only computed expectimax for representative states. Saved probably 60% of my computation time.

Monte Carlo tree search is worth considering too. Sometimes it outperformed my expectiminimax approach entirely, especially when I needed faster move decisions.

The key insight I learned was that perfect play isn’t always necessary. Approximating the game tree intelligently often gives you 95% of the performance with 20% of the computational cost.

Traditional alpha-beta pruning doesn’t work effectively with expectiminimax in 2048 because the chance nodes break the alternating max-min structure that alpha-beta relies on. The expected value calculations at chance nodes mean you can’t simply propagate bounds upward like in standard minimax. However, you can still optimize your search significantly. I’ve had good results using depth-limited search with iterative deepening, focusing computational resources on the most promising branches first. Move ordering is crucial - prioritize moves that create merges or maintain corner strategies early in your search. Another technique that worked well in my implementation was using transposition tables to cache previously computed game states, since 2048 can reach identical board positions through different move sequences. You can also implement probability thresholds to prune chance nodes with very low probabilities that won’t significantly impact the final expected value. Consider using beam search or limiting the branching factor by only considering the most likely tile placements rather than exhaustively exploring every possible spawn location.

alpha-beta wont help much here since expectiminimax needs to calculate all branches at chance nodes anyway. ive found that pruning low-probability spawns (like ignoring 4-tiles when they’re <10% likely) gives decent speedup without hurting play quality too much.

Expectiminimax with standard alpha-beta is indeed problematic for 2048, but there’s a modification called expectiminimax that I implemented successfully. The trick is using bound propagation differently - instead of hard cutoffs, you establish confidence intervals around expected values. When I built my solver, I used a technique where branches get pruned only when their upper confidence bound falls below the current best move’s lower bound. This preserves the probabilistic nature while still eliminating clearly suboptimal paths. Branch and bound works better than traditional pruning here. I also implemented what I called “probability mass pruning” - once the cumulative probability of unexplored branches drops below a threshold (usually 5%), I stop expanding that node. The performance improvement was substantial, roughly 40% reduction in nodes explored compared to naive expectiminimax. Memory usage became the bigger constraint than computation time with this approach, so consider implementing a bounded transposition table if you go this route.

The fundamental issue you’re facing is that alpha-beta pruning requires strict bounds propagation through alternating layers, which breaks down when expectation nodes are involved. I discovered this when implementing my own 2048 bot last year. What I found most effective was implementing a hybrid approach using evaluation function caching combined with selective depth extension. Rather than pruning branches, I focused on smarter evaluation - precomputing heuristic values for common board patterns and using those to guide search priority. One technique that significantly improved performance was implementing what I called “confidence thresholds.” When the expected value difference between moves exceeded a certain margin, I would terminate deeper search on clearly inferior branches. This isn’t true pruning but achieves similar efficiency gains. Another optimization that helped was asymmetric search depth - spending more computational time on moves that maintain high-value tiles in corners versus moves that scatter them. The key insight is that 2048 has enough strategic structure that you don’t need to search every branch equally deep to make good decisions.