Hello! Any modern business owner knows the importance of SEO, and one of the best ways to improve it is by optimizing your pages and writing relevant articles! Today, we’re going to focus on the latter.

Now, in the age of AI, we have the opportunity to significantly reduce costs and speed up the process.

Let me show you how to build a simple scenario that generates high-quality SEO articles from the top 5 Google search results and adapts the content to your business, based on your specific requirements or documentation.

Steps we’ll cover:

- Adding keywords and collecting links via google.serper.dev.

- Parsing text from each search result page using Headless Browser.

- Analyzing all texts and creating a new version (with an AI assistant trained on your business specifics/documentation).

- Saving two versions of the final result in Notion.

Step 1:

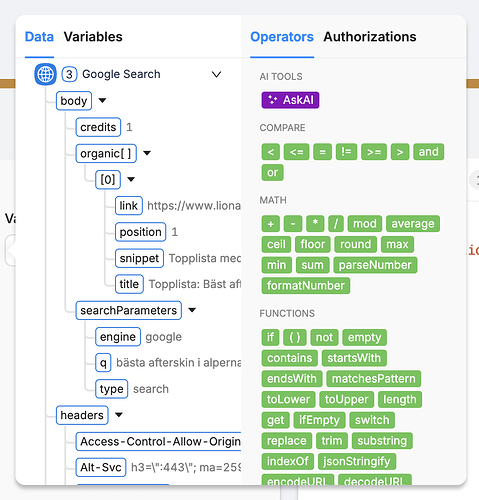

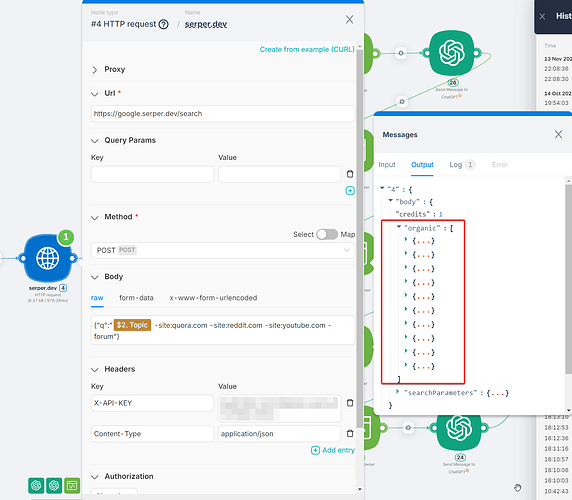

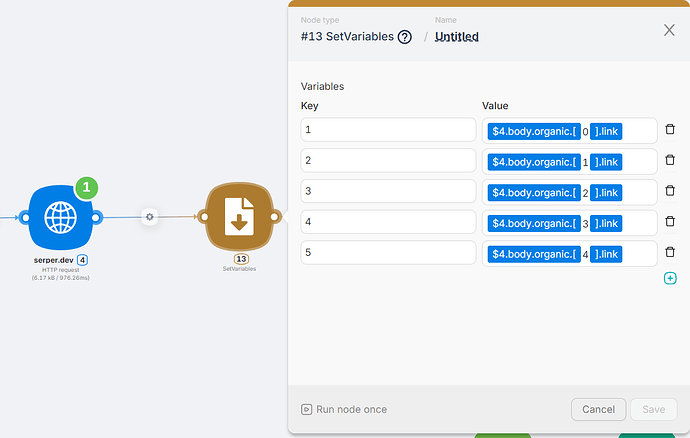

First, we need to set the keywords as a variable, which will let us collect the relevant search results. We’ll use the serper.dev service for this: it returns Google search results for a query as structured data. To exclude irrelevant sources like forums or video hosting sites, we’ll apply filters.
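As a rough illustration of the filtering idea, here is a minimal sketch of how such a request could be assembled in code. It assumes serper.dev's `POST https://google.serper.dev/search` endpoint with an `X-API-KEY` header, and it uses Google's `-site:` operator for the exclusions. The function name, the excluded domains, and the `num` value are placeholders of mine, not settings taken from the scenario.

```javascript
// Sketch only: buildSearchRequest and the excluded domains are illustrative assumptions.
function buildSearchRequest(keyword, excludedSites, apiKey) {
  // Google's "-site:" operator removes a domain from the results
  const exclusions = excludedSites.map((site) => `-site:${site}`).join(' ');
  const query = [keyword, exclusions].filter(Boolean).join(' ');

  return {
    url: 'https://google.serper.dev/search',
    options: {
      method: 'POST',
      headers: { 'X-API-KEY': apiKey, 'Content-Type': 'application/json' },
      // Ask for 10 results so that 5 usable links are likely to remain
      body: JSON.stringify({ q: query, num: 10 }),
    },
  };
}

const searchRequest = buildSearchRequest(
  'best crm for small business',
  ['reddit.com', 'quora.com', 'youtube.com'],
  'YOUR_SERPER_API_KEY'
);
console.log(JSON.parse(searchRequest.options.body).q);
// To send it: const response = await fetch(searchRequest.url, searchRequest.options);
```

Keeping the exclusions in a plain array makes it easy to add or remove domains without touching the query string by hand.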

The response comes as an array, so in the next node (Set Variables), we create multiple variables, enabling us to work easily with each link.
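For readers who prefer code to the Set Variables node, the same step might look like the sketch below. It assumes the results arrive in an `organic` array whose items carry a `link` field; `extractTopLinks` and the sample data are made up for illustration.

```javascript
// Sketch only: assumes a response shaped like { organic: [{ title, link }, ...] }.
function extractTopLinks(response, limit = 5) {
  const results = Array.isArray(response.organic) ? response.organic : [];
  const links = results
    .map((item) => item.link)
    .filter((link) => typeof link === 'string' && link.startsWith('http'));
  // Drop duplicates while preserving ranking order, then keep the first `limit`
  return [...new Set(links)].slice(0, limit);
}

// Made-up sample data standing in for a real response
const sampleResponse = {
  organic: [
    { title: 'Guide A', link: 'https://example.com/a' },
    { title: 'Guide B', link: 'https://example.org/b' },
    { title: 'Guide A again', link: 'https://example.com/a' },
    { title: 'Broken entry' },
  ],
};

const topLinks = extractTopLinks(sampleResponse);
console.log(topLinks);
```

Each entry of `topLinks` then plays the role of one link variable in the scenario.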

Step 2:

To get the text from each page, we’ll use Headless Browser. We’ll write simple code that retrieves all the visible text content from the page. Then we’ll pass that text to the AI, which will return only the article text without any clutter.

```javascript
// Read the URL passed in from the scenario; replace {{$13.1}} with the
// reference to your own link variable
const url = data["{{$13.1}}"];
console.log('Navigating to:', url); // Log the URL

// Navigate to the page and wait until network activity settles,
// so content rendered by client-side scripts is present
await page.goto(url, { waitUntil: 'networkidle2' });

// Extract all visible text from the page
const markdown = await page.evaluate(() => {
  // innerText on <body> already skips elements hidden with CSS
  // (display: none, visibility: hidden) as well as script and style content.
  // Reading innerText from every element instead would repeat nested text
  // once for each ancestor.
  return document.body.innerText.trim();
});

// Return the result (the key is named "markdown", but the value is plain text)
return {
  markdown
};
```

Grabbing all the visible text is the most convenient approach here: every site has its own layout, so I can’t predict which element will hold the blog content.
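Because this approach grabs everything, the raw text can be long and repetitive. One optional pre-processing step, which is not part of the original scenario, is to collapse whitespace, drop repeated lines, and cap the length before the text goes to the AI. The helper name and the 20,000-character limit below are arbitrary assumptions.

```javascript
// Sketch only: cleanPageText and maxChars are illustrative, not part of the scenario.
function cleanPageText(rawText, maxChars = 20000) {
  const seen = new Set();
  const lines = [];
  for (const rawLine of rawText.split('\n')) {
    // Collapse runs of whitespace inside each line
    const line = rawLine.replace(/\s+/g, ' ').trim();
    // Skip empty lines and exact repeats (menus and footers often repeat)
    if (!line || seen.has(line)) continue;
    seen.add(line);
    lines.push(line);
  }
  // Cap the length so a single huge page cannot dominate the prompt
  return lines.join('\n').slice(0, maxChars);
}

const cleanedText = cleanPageText(
  'Home\nHome\n\n  How to   pick a CRM \nHome\nStart with your sales process.'
);
console.log(cleanedText);
```

The trade-off is that exact-duplicate removal can also drop a sentence the author repeated on purpose, so treat it as a cost-saving measure rather than a requirement.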

Step 3:

After collecting the text from all the pages, we can pass it to our pre-configured GPT assistant (where we’ve already uploaded our documentation or outlined our business specifics).
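To make the hand-off concrete, here is a minimal sketch of how the collected texts could be merged into one message for the assistant. The function name, the shape of the `sources` array, and the wording of the instructions are all assumptions; adjust them to your own assistant.

```javascript
// Sketch only: buildAssistantMessage and the instruction wording are assumptions.
function buildAssistantMessage(keyword, sources) {
  // Label every source so the assistant can tell the articles apart
  const sections = sources.map(
    (source, index) => `### Source ${index + 1}: ${source.url}\n${source.text}`
  );
  return [
    `Target keyword: ${keyword}`,
    'Using the sources below as research, write one original article.',
    'Do not copy sentences verbatim; adapt the content to our business.',
    '',
    sections.join('\n\n'),
  ].join('\n');
}

const assistantMessage = buildAssistantMessage('best crm for small business', [
  { url: 'https://example.com/a', text: 'Text of the first article.' },
  { url: 'https://example.org/b', text: 'Text of the second article.' },
]);
console.log(assistantMessage);
```

The business-specific context stays in the assistant's own configuration, so the message only needs to carry the keyword and the research material.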

An AI assistant has an advantage over a regular one-off AI query: it keeps your instructions and uploaded documents between requests, so the output is tailored to your specific requirements and comes out far more consistent.

Step 4:

Save the generated results. In this case, I create two versions. The first is rephrased with Claude 3.5, which in my experience produces more natural, human-like text than ChatGPT. The second is saved as-is, straight from the assistant.
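If you save through the Notion API directly instead of a ready-made node, the page body has to respect two API limits: 2,000 characters per rich text object and 100 blocks per create request. The sketch below builds such a payload. It assumes a database whose title property is called `Name`; the helper name and sample values are hypothetical.

```javascript
// Sketch only: the "Name" title property and the helper name are assumptions.
const NOTION_TEXT_LIMIT = 2000; // max characters in one rich text object
const NOTION_MAX_CHILDREN = 100; // max blocks in one create-page request

function buildNotionPage(databaseId, title, articleText) {
  const children = [];
  for (const paragraph of articleText.split(/\n{2,}/)) {
    const text = paragraph.trim();
    // Split paragraphs that exceed the rich text size limit
    for (let start = 0; start < text.length; start += NOTION_TEXT_LIMIT) {
      const content = text.slice(start, start + NOTION_TEXT_LIMIT);
      children.push({
        object: 'block',
        type: 'paragraph',
        paragraph: { rich_text: [{ type: 'text', text: { content } }] },
      });
    }
  }
  return {
    parent: { database_id: databaseId },
    properties: { Name: { title: [{ text: { content: title } }] } },
    // Anything beyond the first 100 blocks would need a follow-up append request
    children: children.slice(0, NOTION_MAX_CHILDREN),
  };
}

const notionPage = buildNotionPage(
  'YOUR_DATABASE_ID',
  'Best CRM (assistant version)',
  'Intro paragraph.\n\n' + 'x'.repeat(2500)
);
console.log(notionPage.children.length);
```

Calling the helper twice, once with the assistant's text and once with the rephrased text, gives the two versions described above. The payload would be sent with `POST https://api.notion.com/v1/pages`, an `Authorization: Bearer` token, and a `Notion-Version` header.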

Remember, when working with AI models, most of the outcome depends on your prompt. Don’t hesitate to experiment and tweak it!

In the end, you’ll get two high-quality articles on your chosen topic, both built from the content that currently ranks at the top of Google for your keywords.

That’s it! You can build any level of complexity around this logic, such as generating content for multiple keyword queries or adding further processing steps.

If you have any questions, feel free to ask!