I’m working with LangSmith for tracking Claude AI interactions through OpenTelemetry integration. The tracing works well and captures most events, but I noticed it doesn’t seem to record the actual model responses that come back from Claude. I need to find a straightforward way to capture these responses and send them to LangSmith for complete monitoring. I know there are different LLM proxy solutions that can sit between my application and the model to capture both requests and responses, but I’m not sure which approach would be the simplest to set up. Has anyone found an easy solution for this? I want to make sure I can see the full conversation flow in my telemetry data.

I’ve been working on Claude response capture for monitoring and found a different approach that works well. Instead of just using decorators or callbacks, I built a custom wrapper class around the Claude API client that grabs both requests and responses before they reach my app logic. That gives me way more control over what actually gets logged to LangSmith. The wrapper inherits from the base Claude client and overrides methods like messages.create(). I make the original API call, grab the response, then use the LangSmith client’s create_run() to manually send the data. This worked better for me since I needed to filter out sensitive stuff before logging. Watch out for token counting and cost tracking when you’re capturing full responses though. The overhead adds up fast with high-volume inference. I ended up sampling responses instead of logging everything - keeps costs down while still giving good visibility into how the model’s behaving.
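A minimal sketch of that wrapper idea, for anyone who wants a starting point. It uses composition rather than inheritance, which is a swap on my part to keep the example short. `LoggingClaudeClient`, `redact`, `log_sink` and the email regex are all hypothetical names for illustration; `log_sink` is just any callable, so you would plug your own LangSmith logging call in there. It also assumes each message's `content` is a plain string.

```python
import random
import re
from typing import Any, Callable, Dict

# Hypothetical redaction rule: mask anything that looks like an email address.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text: str) -> str:
    """Replace email-like substrings before anything is logged."""
    return EMAIL_RE.sub("[REDACTED_EMAIL]", text)


class LoggingClaudeClient:
    """Wraps a Claude client; forwards redacted, sampled records to a sink."""

    def __init__(
        self,
        client: Any,
        log_sink: Callable[[Dict[str, Any]], None],
        sample_rate: float = 1.0,
        rng: Callable[[], float] = random.random,
    ) -> None:
        self._client = client          # e.g. an anthropic.Anthropic() instance
        self._log_sink = log_sink      # assumption: your own logging callable
        self._sample_rate = sample_rate
        self._rng = rng

    def create_message(self, **kwargs: Any) -> Any:
        # Make the real API call first so logging never blocks the response.
        response = self._client.messages.create(**kwargs)

        # Log only a sample of calls to keep volume and cost down.
        if self._rng() < self._sample_rate:
            messages = [
                {**m, "content": redact(m["content"])}
                for m in kwargs.get("messages", [])
            ]
            text = "".join(getattr(b, "text", "") for b in response.content)
            self._log_sink(
                {
                    "inputs": {"messages": messages},
                    "outputs": {"text": redact(text)},
                }
            )
        return response
```

The app keeps calling `create_message(...)` with the same keyword arguments it would pass to `messages.create(...)`, and the original response object comes back untouched.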

Had the exact same headache implementing Claude monitoring six months ago. OpenTelemetry’s response capture is pretty spotty. LangSmith’s Python SDK saved me - specifically their `@traceable` decorator. Just wrap your Claude API functions with it, set your LANGSMITH_API_KEY, and turn tracing on with LANGSMITH_TRACING=true. It records each decorated function’s inputs and return value, so you get both prompts and full responses without any middleware hassle. Setup’s dead simple: `pip install langsmith`, import the decorator, slap it on your Claude functions. You’ll have complete conversation tracking in their dashboard within hours. I looked at proxy solutions too but they’re way overkill for basic logging. The SDK approach has been bulletproof in production and lets you control exactly what gets tracked.
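Roughly what the decorator approach looks like, as a sketch rather than a drop-in. `make_traced_ask` and the run name `claude_call` are made-up names, the model string is a placeholder you would replace, and the `ImportError` fallback is only there so the snippet still runs where `langsmith` isn't installed. The client is expected to look like `anthropic.Anthropic()`.

```python
from typing import Any, Callable

try:
    from langsmith import traceable
except ImportError:
    # Fallback so the code still runs without langsmith: a no-op decorator.
    def traceable(*_args: Any, **_kwargs: Any) -> Callable:
        def decorator(fn: Callable) -> Callable:
            return fn
        return decorator


def make_traced_ask(client: Any, model: str) -> Callable[[str], str]:
    """Build a traced helper around a Claude client (hypothetical helper)."""

    @traceable(run_type="llm", name="claude_call")
    def ask(prompt: str) -> str:
        # The prompt is the traced input; the returned text is the traced output.
        response = client.messages.create(
            model=model,
            max_tokens=512,
            messages=[{"role": "user", "content": prompt}],
        )
        return response.content[0].text

    return ask
```

Returning the response text from the decorated function is what makes it show up as the run's output, so keep the return value as whatever you want to see in the dashboard.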

Had this exact problem last year with our Claude monitoring setup. Only getting half the data was driving me nuts.

Easiest fix? LangChain’s callback-based tracing. If you’re already using LangChain with Claude, set LANGSMITH_TRACING=true and LANGSMITH_API_KEY (or pass a LangChainTracer callback to your chain or model calls). It grabs both prompts and full responses automatically - no proxy needed.

Not using LangChain? The LangSmith SDK has a decorator (`@traceable`) that wraps your Claude API calls and captures their inputs and outputs. Takes maybe 5 minutes to set up.

I messed around with proxy solutions first but they just added complexity we didn’t need. Direct integration is way cleaner and you don’t have to babysit another service.

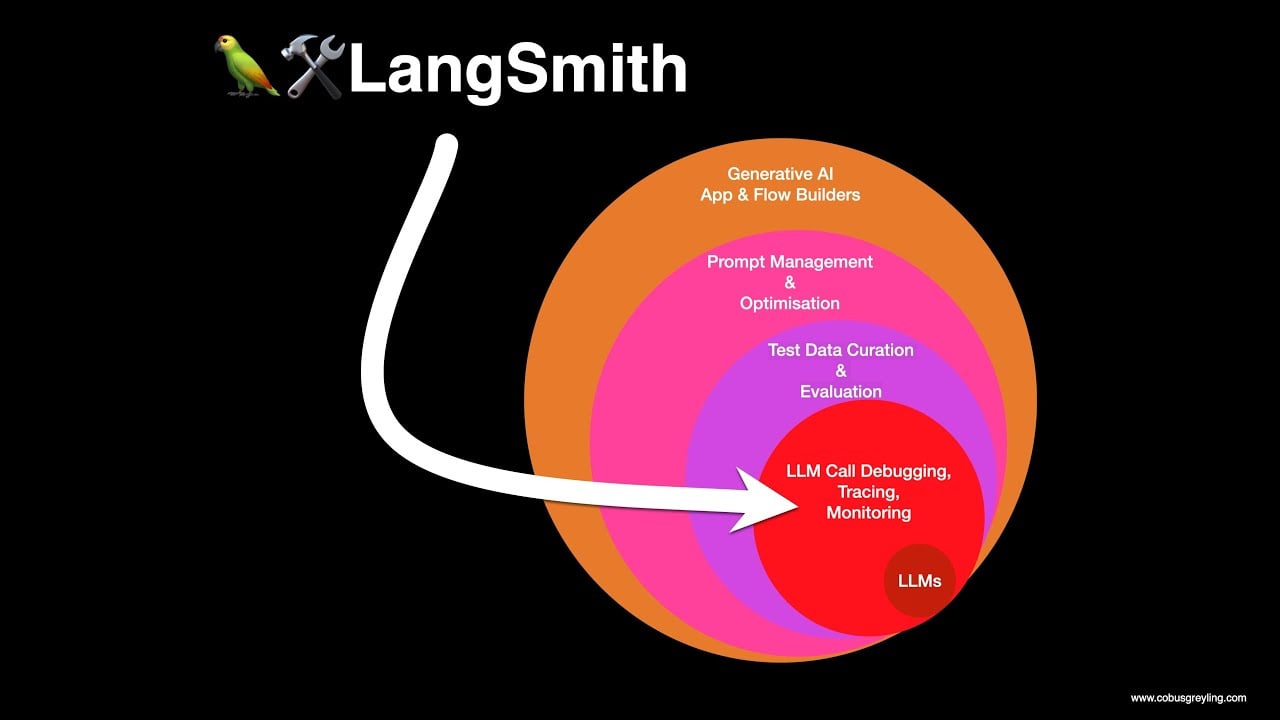

This video shows the whole LangSmith setup if you want to see it working:

Once it’s running, you’ll see complete request/response pairs in your dashboard. Debugging becomes so much easier.

Yeah, partial telemetry data is useless for debugging conversation flows.

Ditch the manual wrapper classes and decorators. They’re a pain to maintain and you’re still doing all the LangSmith integration work yourself.

I use Latenode for this. Set up a workflow between your app and Claude. Your app hits the Latenode webhook, it forwards to Claude, grabs the full response, sends data to LangSmith and returns the response to your app.

Best part? Zero code changes in your main app. No SDK dependencies, no decorators, no custom classes. Just point your Claude endpoint URL at your Latenode workflow.

Built something like this for production monitoring last month. The workflow handles LangSmith formatting, token counting, and smart sampling based on response size. Takes 10 minutes vs hours of custom integration.

You can easily add other monitoring tools later without touching your app code again.

Been running Claude monitoring in production for 3 years. Most solutions break when you scale.

The problem? OpenTelemetry auto-instrumentation doesn’t capture response bodies by default. It’s built for HTTP headers and metadata, not full LLM responses.

What works long term? Manual instrumentation with the LangSmith SDK, but do it right. Don’t wrap individual API calls or scatter decorators everywhere.

Build one monitoring service that handles all LLM interactions. Route everything through it. This service makes the Claude calls, captures full request/response pairs, and sends them to LangSmith in batches.

Learned this the hard way after decorators across 20+ files became a debugging nightmare. One centralized service = consistent logging, easier sampling rules, and you can swap monitoring backends without hunting down decorators.

Batch sending is crucial. LangSmith chokes on high-volume real-time logging. Buffer responses and send them every 30 seconds. Keeps your main app fast.
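The buffering part could be sketched like this. `BatchingTraceBuffer` and `send_batch` are hypothetical names; `send_batch` stands in for whatever actually ships a list of records to LangSmith, and `max_batch` is an extra size cap that I'm assuming you would want on top of the 30-second interval.

```python
import threading
import time
from typing import Any, Callable, Dict, List


class BatchingTraceBuffer:
    """Collects request/response pairs and hands them off in batches."""

    def __init__(
        self,
        send_batch: Callable[[List[Dict[str, Any]]], None],
        flush_interval: float = 30.0,
        max_batch: int = 100,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._send_batch = send_batch      # assumption: your LangSmith uploader
        self._flush_interval = flush_interval
        self._max_batch = max_batch
        self._clock = clock
        self._lock = threading.Lock()
        self._pending: List[Dict[str, Any]] = []
        self._last_flush = clock()

    def record(self, request: Any, response: Any) -> None:
        """Queue one pair; flush if the batch is full or the interval passed."""
        with self._lock:
            self._pending.append({"request": request, "response": response})
            due = (
                len(self._pending) >= self._max_batch
                or self._clock() - self._last_flush >= self._flush_interval
            )
        if due:
            self.flush()

    def flush(self) -> int:
        """Send everything queued so far; returns how many records were sent."""
        with self._lock:
            batch, self._pending = self._pending, []
            self._last_flush = self._clock()
        if batch:
            # Sending happens outside the lock so callers aren't blocked on I/O.
            self._send_batch(batch)
        return len(batch)
```

In this sketch a flush is only checked when a new record arrives, so a real service would probably also call `flush()` from a background timer and on shutdown so the last records aren't left sitting in the buffer.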

just use the LiteLLM proxy - it’s built exactly for this. it spins up a local proxy between your app and Claude, automatically captures requests and responses, and pushes them to LangSmith. no code changes needed beyond pointing your client at the proxy. way easier than building custom wrappers or decorators.